Author / fromjia

BLE Hand Flute

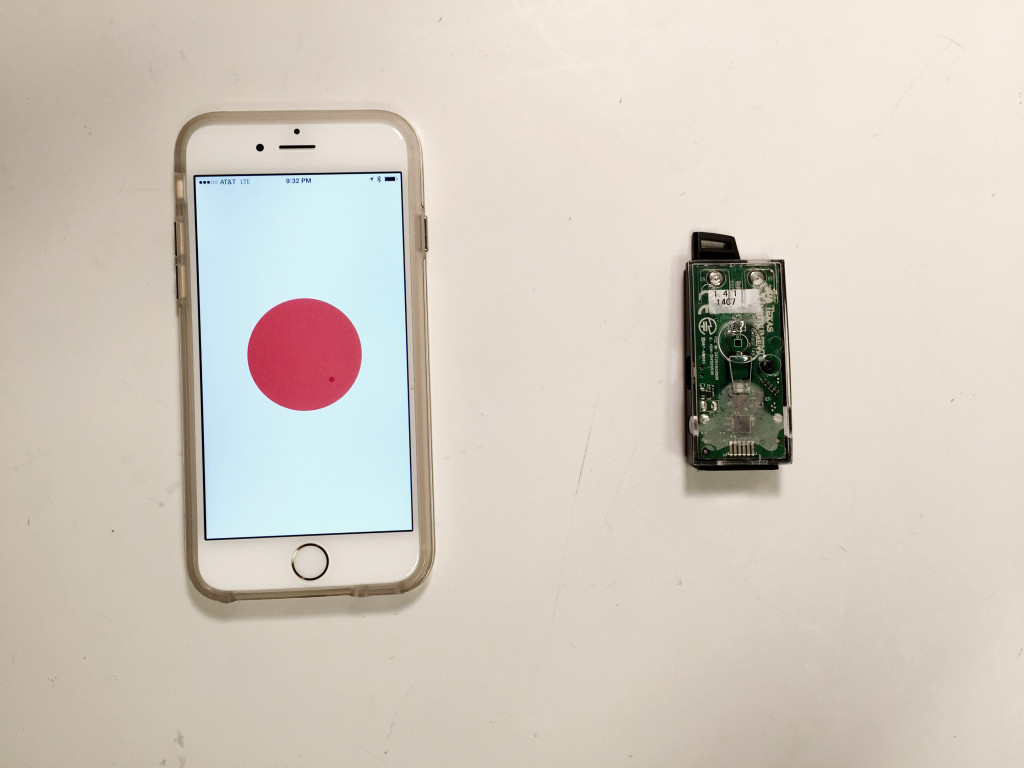

I had meant to post this little project a few weeks ago. This was my first time reading sensor values on my phone over a BLE connection (and I have loved BLE ever since). I used the TI SensorTag, which has a bunch of sensors on board. To read any of their values, you first have to turn that sensor on in code. For the visual and audio response, I used p5.js and its sound library, p5.sound.
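As a rough sketch of what "turning a sensor on" means: on the SensorTag, each sensor has a configuration characteristic next to its data characteristic, and writing `0x01` to it powers the sensor up. The UUIDs below are, to my knowledge, the ones TI lists for the IR temperature sensor, picked here only as an example. The `ble` object and its promise-based `write` method are hypothetical stand-ins for whatever BLE library you use, not the code from this project.

```javascript
// Sketch only: `ble` is a hypothetical object exposing a promise-based
// write(deviceId, serviceUuid, characteristicUuid, arrayBuffer) method.
// UUIDs are for the SensorTag IR temperature sensor (example sensor, assumed).
const IR_TEMPERATURE = {
  service: 'f000aa00-0451-4000-b000-000000000000',
  data: 'f000aa01-0451-4000-b000-000000000000',
  config: 'f000aa02-0451-4000-b000-000000000000',
};

// Build the one-byte payload that switches a sensor on (0x01) or off (0x00).
function configPayload(enabled) {
  return new Uint8Array([enabled ? 0x01 : 0x00]).buffer;
}

// Write "on" to the sensor's config characteristic.
// Until this happens, the data characteristic does not report live readings.
function enableSensor(ble, deviceId, sensor) {
  return ble.write(deviceId, sensor.service, sensor.config, configPayload(true));
}
```

After the write succeeds, you would read or subscribe to `IR_TEMPERATURE.data` to get values.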

The code is here.

(Turn on audio before watching this video)

AOAC final idea: augmenting social interactions

Fish and Carrier Frequencies

What do we experience when we are in the physical presence of other people? Do we experience joy or fear? Can we augment this experience by altering what we see and hear?

These are some of the things I want to explore with my AOAC final project. Jason and I will be working together to create an “augmented social” experience using BLE connections between smartphones, or between smartphones and beacons. We have two ideas. Both involve multiple participants wearing Cardboard to augment their sight and headphones to augment their hearing.

Fish: The experience we create would simulate a school of fish interacting with each other underwater. Each participant would represent a fish in the experience.

- Each person/fish triggers a different oscillator that has a unique sound.

- As one person/fish gets closer to another person/fish, they would hear the other’s sound and see the corresponding visuals.

- The visuals they see in Cardboard would be a filtered camera view that changes based on how close other people/fish are.

- Each person/fish can also speak into their microphone to add more audio cues to the whole group’s experience.

- The direction the person/fish is facing can also change the audio or visual experience.

Carrier Frequencies: Experiencing other people only through their devices’ frequencies. The details of this idea are similar to the Fish idea, but conceptually it focuses on the connection between our devices rather than on our actual social interactions.

- Every participant emits a “carrier” frequency, which has parameters such as amplitude and filter frequency.

- Parameters are modulated by proximity to other people, so other people act as “modulators.”

- The frequencies are both sonified and visualized.
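One way to picture the modulation, as a sketch and not something we have built yet: take the distance to the nearest other participant and map it linearly onto amplitude and filter frequency. Every name and range here (`modulateCarrier`, `maxRange`, 200 to 2000 Hz) is made up for illustration.

```javascript
// Linearly map value from [inMin, inMax] to [outMin, outMax],
// clamping so out-of-range input stays within the output range.
function mapClamped(value, inMin, inMax, outMin, outMax) {
  const t = Math.min(1, Math.max(0, (value - inMin) / (inMax - inMin)));
  return outMin + t * (outMax - outMin);
}

// distances: metres to each other participant (hypothetical input).
// maxRange: distance at which a person stops having any effect (assumed 5 m).
// Closer people push the amplitude and the filter frequency up.
function modulateCarrier(distances, maxRange = 5) {
  const nearest = distances.length ? Math.min(...distances) : maxRange;
  return {
    amplitude: mapClamped(nearest, 0, maxRange, 1.0, 0.1),
    filterFreq: mapClamped(nearest, 0, maxRange, 2000, 200),
  };
}
```

In a p5 sketch, the returned values could be passed to an oscillator's `amp()` and a filter's `freq()` on each frame.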

Getting technical

To measure the physical relationship between each person/device, we will use BLE connections between devices, or between devices and iBeacons placed around the room.

We’ve tested with iBeacons. The distance measured is not very accurate, but the audio produced from the frequencies is pretty cool.
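Some of that inaccuracy comes with estimating distance from signal strength at all. A common approximation (not necessarily what the iBeacon framework does internally) is the log-distance path loss model, `d = 10 ^ ((txPower - rssi) / (10 * n))`, where `txPower` is the calibrated RSSI at 1 m and `n` depends on the environment. The sketch below assumes `txPower = -59` dBm and `n = 2`, both illustrative values, and adds a simple exponential moving average to steady jumpy readings.

```javascript
// Estimate distance in metres from an RSSI reading (log-distance path loss model).
// txPower: expected RSSI at 1 m (assumed -59 dBm).
// n: path loss exponent (assumed 2, roughly free space; indoor rooms are often higher).
function rssiToDistance(rssi, txPower = -59, n = 2) {
  return Math.pow(10, (txPower - rssi) / (10 * n));
}

// Exponential moving average for noisy readings.
// alpha in (0, 1]: higher reacts faster, lower is smoother (assumed 0.2).
function makeSmoother(alpha = 0.2) {
  let smoothed = null;
  return (value) => {
    smoothed = smoothed === null ? value : alpha * value + (1 - alpha) * smoothed;
    return smoothed;
  };
}
```

Each beacon would get its own smoother, for example `const smooth = makeSmoother(); const d = smooth(rssiToDistance(rssi));`, with the smoothed distance then driving the audio.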

I’m also trying to connect devices to each other directly through BLE. There are two PhoneGap plugins (this and this) that can set up an iPhone as a peripheral, but I’m not having much luck with them. There are definitely more options in Objective-C. I’ll have to look into this more.

UPDATE:

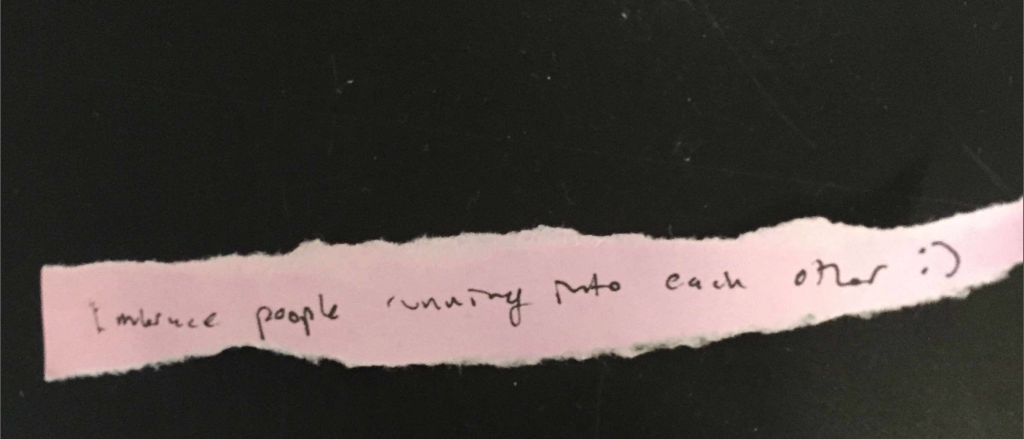

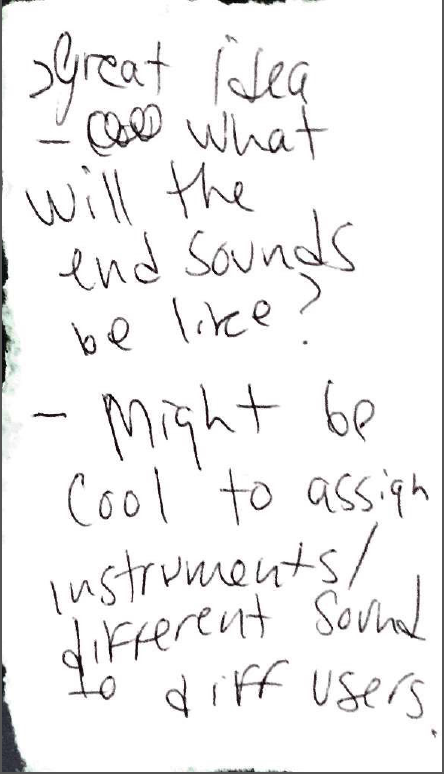

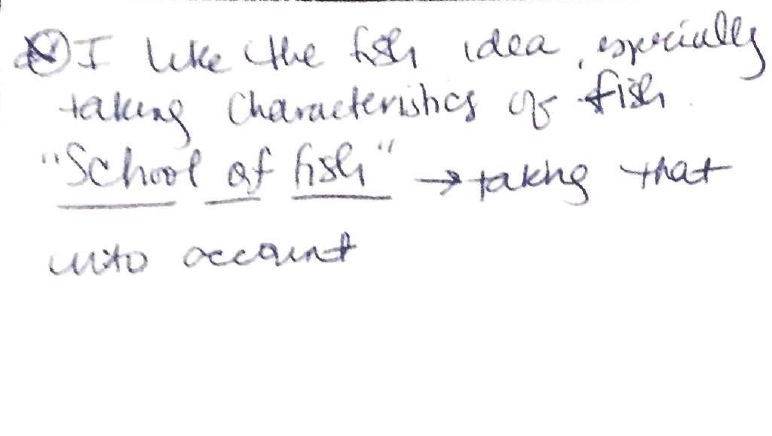

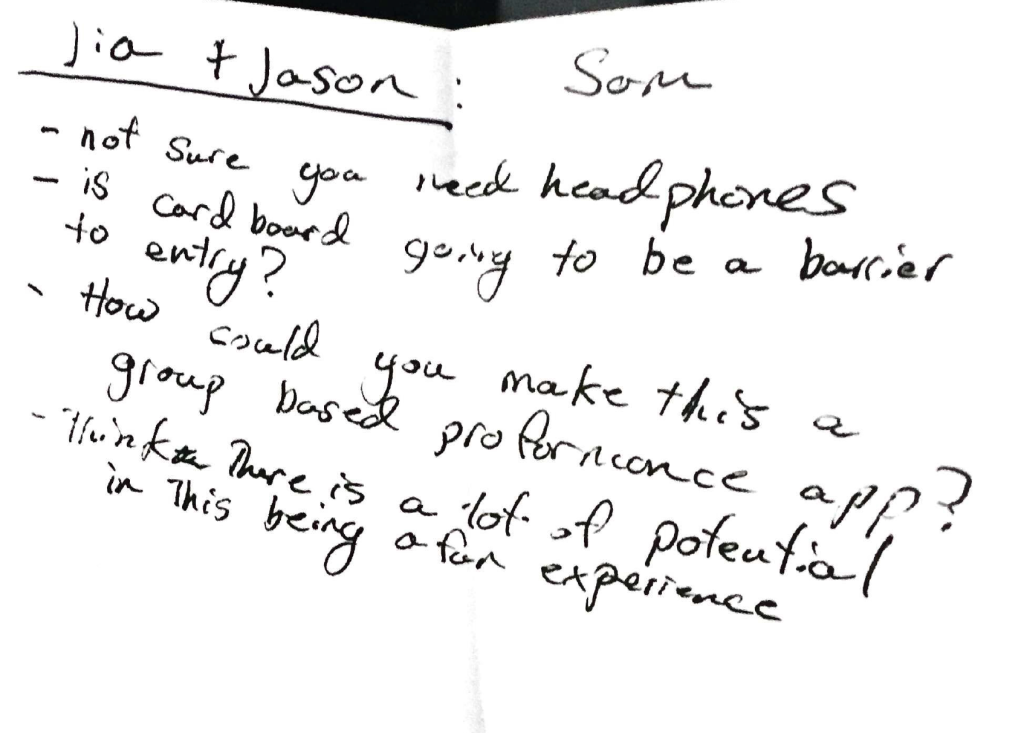

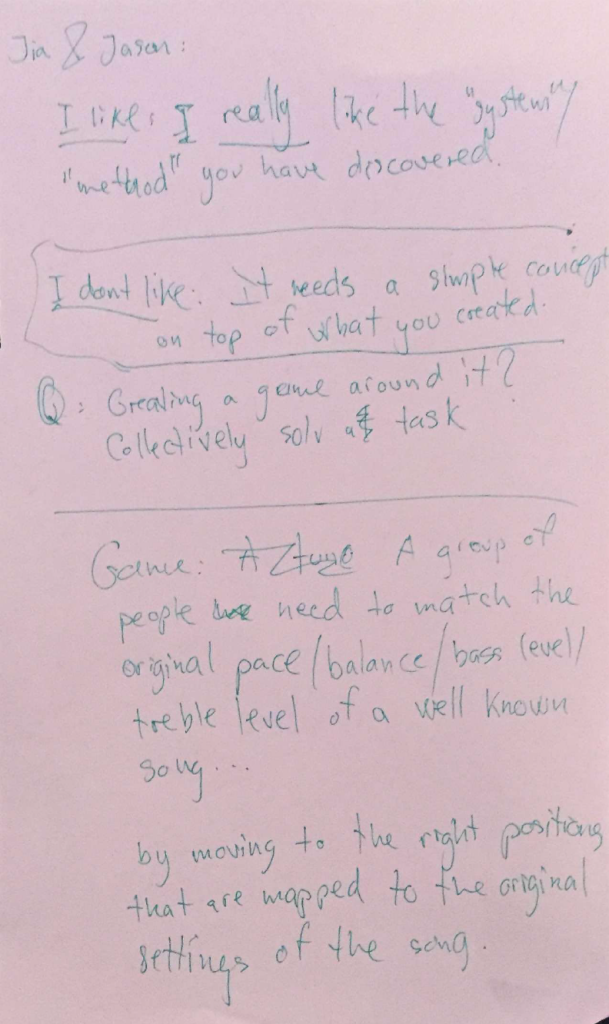

We received a lot of great feedback from the class after we presented the ideas. Here are some of the feedback notes we collected.